Social challenges of AI

Introduction

Artificial intelligence is a hot topic, and is becoming more and more used. This poses interesting social challenges. Others have written about some of these issues, indeed techdirt, EFF,… care about these things, and more general technical oriented outlets pick them up from time to time.

Artificial intelligence (AI) is a broad topic, we will look mainly the issues connected with Machine Learning (ML) and Statistical Learning in general. This is also the field where we had most of the recent advances, that brought AI in the public eye.

The effects depend on the domain of application, and range from the absence of tact, and sensibility to social consequences, to matters of life or death. Understanding the consequences can be difficult, even when applied to seemingly inconsequential things like marketing and suggesting interesting articles it can have serious consequences.

Many of these issues are not new, machine learning tries to emulate our learning process, and can fall into many of the same pitfalls that affect us, sometimes with some extra challenges given from the new settings.

Even without any kind of AI we effects that range from mundane (having to fight against an overwhelming tide of cow themed toys and objects), to more serious (radicalization and racism).

After looking at some unexpected effects of the application of Machine Learning, in the last part I will focus on an issue that while already discussed by others, I feel was not properly addressed: how even Machine Learning can be racist and why it is bad.

Statistical learning and machine learning

When, in the 50’s-60’s, artificial intelligence started as a field of study, it was marked by optimism, however some problems like image classification turned out to be much harder than expected. Today there is again a lot of discussion on artificial intelligence, connected with successes with several of these difficult problems. This is due a series of breakthroughs brought by a combination of large amounts of data, faster computers and better algorithms (like improvements to stochastic gradient descent to optimize neural networks).

If one looks carefully these improvements are actually mainly improvements to machine learning (ML). That means that we are better at recreating complex statistical distributions, for example, using the flexibility of iterated functions (as in deep neural networks). This is a significant development, but it isn’t magic. It has nothing to do with the development of the logical, analytic abilities, we often associate with computers, but rather the ability to better grasp and reproduce complex statistical distributions.

As often the case with new technologies, machine learning (ML) too can be seen as a new set of tools. It makes many interesting applications possible, but, to go back to our subject matter, it isn’t intrinsically good or bad. To tackle that question we have to consider which consequences these applications bring with them.

These consequences aren’t always obvious, especially given that the model produced is often difficult to interpret, as it only tries to reproduce the (often complex and difficult to interpret) statistical distribution of the data.

Low stakes applications of AI

Congratulations on your new child

With every credit cards, banks or shop’s loyalty programs, we implicitly agree to the collection of a lot of information about what we buy (and much more), information that can be mined for marketing purposes.

There have been stories about Target (a large retailer in the United States) predicting pregnancy (interesting as it triggers many purchases) before the father knew (Forbes, BusinessInsider). It is unclear if the story is true, also because marketers know that the one might want to hide the pregnancy, so they normally aren’t too obvious about using this information.

Still the pitfalls connected with using this kind of information are real: What if the woman is hiding her pregnancy, because it isn’t the son of her partner, or she simply hasn’t told him yet. What if she lives within a very strict family that would not accept her being pregnant out of wedlock? What if she was pregnant, but she lost the child?

These examples show that even “just for marketing”, the consequences might be serious.

More of the same please

A case that seems to be even more harmless is trying to help the user find more similar or interesting content, using the choices done in the past. Given the huge amount of content available on the internet this seems very good and useful, but there are several serious pitfalls.

- It can make very objectionable content quickly reachable: For example, recently YouTube had to perform a large intervention, after Matt Watson showed how in 5 clicks one could reach what he defined as a “wormhole into a soft-core pedophilia ring on YouTube” (the verge, arstechnica, techcrunch). YouTube acted quickly, disabling comments, and lots of accounts and videos, also because advertisers boycotted Google as they did not want to be associated with pedophiles. In this case the suggestion algorithm shows the problem that moderation is hard (republished from the EFF deeplinks blog)

- The more worrying thing is that it can distort the world view of a person. How much depends on the algorithm used, at worst it shows only related things, and most likely it will at least continue to bring up some things related to it. The consequences of this vary, from being exposed to continuous reminders/photos/… of an ex loved one after a breakup, to continually being shown extremist material/propaganda after having looked at it once, contributing to radicalization.

The distortion of the world view can happen at various levels also in real life. It can be mostly harmless: I had a friend that had for a long time a plush cow meant as a funny present for someone else in his car. It was quite conspicuous so many thought he did like toy cows very much, and he began to receive cow themed toys and stuff, because everybody thought he did like them, and seeing more and more of these it seemed to confirm his liking. Now he didn’t dislike them, but neither liked them very much.

The apparent consensus or relevance of some arguments shown by suggestion algorithms in facebook, twitter,… can have more serious consequences. Parents that worry about the “bad company” of their children worry about this. Sometime the effect is exaggerated, but the environment and suggestions definitely have some effect.

In all these cases (just as on the internet), one does not have to follow what is suggested, ultimately I think the individuals are still responsible for their choices, and there are persons that can and do push back against this. And that is exactly the point: there is a push in one direction, and it takes effort to push back, so these things do have an effect. In real life one knows what he should do to get away from such influences, it might be difficult, but we have examples showing possible ways out. With opaque algorithms that never forget it can be less obvious, one might not even be aware of the issue.

This compounded by the fact that the goal the algorithm is optimized for often is not the long term usefulness for the user, but “engagement” to ensure that the user stays more and is more active, and sees more advertisement, and thus produces more return on investment for who runs it (Facebook, twitter, google,…). The problem with that is that the more extreme are the things, if they polarize it, then it is more likely that to have a reaction. Thus, optimizing for engagement pushes one toward polarization.

Right to be forgotten

The right to be forgotten, about which I feel a bit conflicted, is quite apt and non controversial in this case. One should be able to either clear his data, or create a new account from scratch and free himself from strange/bad/annoying consequences of his choices (for example about what he had looked at or bought). Creating a new account has a cost (loosing the direct connection to the work put in the previous identity), so it might avoid excessive abuse.

I understand the idea of giving another chance (in literature these have inspired a number of novels, among which I particularly cherish “Les Misérables”), or forget about and get over the “childhood/youth mistakes”. Still, I am not too sure how to do it and where to put the line between freedom of expression (limited by the right to be forgotten), accountability and the right to start again from a clean state. The dark side of the right to be forgotten is its use to censor (often to hide the deeds of corrupted politicians), especially if it forces search engines and social sites to effectively build a censoring tool (to avoid the “things to be forgotten”).

Malicious use of an AI

Never ascribe to malice that which is adequately explained by incompetence Robert Heinlein

Until now we have discussed unwanted or unexpected harmful effects of AI. We should avoid seeing too much intentionality in every action and fuel conspiracy theories.

Still, one can use AI for malicious purposes. Often these use AI ability to influence people.

Marketing and advertisement do try to do it, but we have laws limiting it. Thanks to them, and the fact that advertisement is an open attempt to sell something, it constitutes a sort of training to identify and resist manipulation attempts by others. However there are more disturbing uses of AI than the “just selling something” described up to now.

In a recent experiment on Facebook, published in 2014 attention was drawn to the possibility to influence emotions on a large scale.

As proven in other contexts, election manipulation is not a new phenomenen, and the “electronic part” is just a new side of it with more or less licit means, as shown by Andrés Sepúlveda first, the scandal involving Cambridge Analytica later, and the talk of foreign interference on the 2016 US elections.

Such a contentious theme, needs much more space to be discussed.

Higher stakes use of AI

Other uses of AI that have been envisioned, uses that can affect life in a more direct and dramatic way. Extending the consequences of applications involving marketing or suggestion algorithms, one may reasonably fear that the larger are the stakes, the worse are the pitfalls.

Indeed, machine learning can be used to screen people, for insurance, financial, or work reasons.

Police and border control have expressed interest in AI technology early on. Part of it is to improve surveillance and identification. Facial recognition software is increasingly used in USA, Russia, China, UK, and many other countries are trying to build up a monitoring infrastructure. The issue in this is the erosion of the private space, and privacy an issue we will discuss later. The step from police work to population monitoring and dissent control is not so large, and some have already taken it.

ML for example in the USA has been used to evaluate the recidivism likelyhood and thus contribute to the decision about liberty or incarceration. Challenges and issues in this (and what is labeled as “evidence based “) have been discussed by others:

- Larson et al. for the COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) algorithm,

- Stevenson wrote a very detailed and stimulating review of risk assessment in general. It inspired a simpler and shorter article on arstechnica that is worth reading.

China is evaluating the use of BigData analysis in its social credit system.

Malicious manipulations of an AI

Any system can be manipulated by malicious users. AI can be affected by this, as when Microsoft chat bot Tay started making racist remarks and had to be removed after less than 24 hours of operation (The verge, arstechnica).

The issue there rather than racism of the bot, was that the bot was easy to manipulate, and racist people did try to take advantage of that (by making it repeat racist sentences for example). The Chinese version Xiaoice was and is much more successful (and is still working), but the attempt to bring it back in English blocking all themes that could be in any way controversial was not so well received, and has now been terminated.

Manipulation by malicious users is a serious issue, that needs to be addressed. This can be overly complicated by the fact that a norm or “correct” behaviour has to be defined along with a melevolent one. Power and interests, censorship and propaganda are all elements that have to be discussed deeply and in the most inclusive way and definitely exceed the scope of this article. I will only discuss a bit the incorrect representation of the distribution due to adversarial changes.

Bias

The issue of bias has been discussed in a interesting article on arstechnica.

The article rather quite short and explain how machine learning (ML) just cares about picking up patterns, and can pick up racial bias (even indirectly through other features that correlate with race, thus making it difficult to avoid), discusses examples of bias. Still, while the article is well written, it comes short on explanations, in my opinion, simplifying many points with the assumption “garbage in garbage out”. This simplistic view does not do justice to the gravity of having such bias and the difficulty of avoiding it.

The episode “Are we automating racism?)” of Vox series Glad You Asked This, is a better introduction to the issues I want to tackle below and their consequences.

Racism

Here I want to concentrate on an issue that as a society we have recognized as bad, and we still struggle with: Discrimination and racism.

I want to discuss discrimination and racism by the algorithm itself.

Some think that preconceptions, racism, xenophobia,… stem from group dynamic, that one wants to belong to a group and see the enemy in others. That maybe they even have an evolutionary component. Group dynamics and social aspects are real, but I do not think that the are at the origin of preconceptions,… but rather that they can support and take advantage them.

Racism at its core should not be fought and refused just because it is bad or wrong, but as well, as I will try to show simply because it is a stupid mistake. One that it is easy to make and difficult to avoid completely.

The core of racism stems in my opinion from preconceptions and errors in the models we build. We need to classify and simplify the world to understand it, but this can lead to incorrect deductions.

These can often be just harmless preconceptions like “Swiss people are punctual”, but these are nonetheless the seed of racism. Unfortunately, it is difficult if not impossible to fully avoid it. We need a working model of the world to know how to act…

While algorithms are free from group dynamics and evolutionary pressure (unless explicitly programmed in it), and thus be free from feedbacks that can reinforce racism, they can still can succumb to this kind of mistakes.

I divide the errors (excluding logical mistakes) in two main classes: generalization / simplification errors and slicing errors.

Generalization / Simplification Errors

Generalization and simplification errors stem from simplifying too much the model, or extrapolating it to cases where it does not apply.

Machine learning has a part that has an effect similar to the generalization/simplification in our thinking. This is what controls the complexity or smoothness of the representation.

Machine learning can make generalization/simplification errors, but I have the feeling that in general, if done properly, it can or it will be able to cope with them maybe even better than us. This for a couple of reasons:

- Machine learning has often more data than us (we tend to extrapolate instinctively even with very few/no data points) and then we get attached to our faulty extrapolations

- There are methods (cross validation,…) to verify, and (depending on the method more or less easily) optimize the amount of simplification/compression/regularity applied to the amount of data available (with little data the models need to be simple with lots of data it depends)

- We can also try to predict the variability of the distribution, or trust in the model, along with the model, thus having at least some idea on the amount of error expected in the prediction. It is still an open research problem (especially with neural networks), but there is progress.

There is still a lot of ongoing research, and there are cases where things can go wrong (discontinuities for example) but on the whole I am pretty optimistic.

Please note that just because it is possible to handle this problem in a reasonable way it does not mean that it has been done so in a specific application, it is still a difficult problem, and one should be vigilant.

Slicing errors

I call slicing errors, those errors that stem from the mismatch between the kind of data used and the problem at hand. This is a much more insidious problem.

An example can help visualize all the kind of errors I want to discuss about.

Doctor I broke my leg…

Think about going to a doctor, with a broken leg. The doctor doesn’t look at you or ask you what is wrong, he just asks you to fill out a form. After that, he treats you for carpal tunnel syndrome, because given the age, job, sex and address you wrote in the form, this is the most likely treatment that you need.

This case shows all the issues and complexity of discrimination in a noncontroversial setting (discussions on discrimination, racism,… get polarized easily):

- Correlation does not imply causation.

Indeed, if one looks at many uncorrelated features, at some point it becomes statistically likely to find at least some correlation.

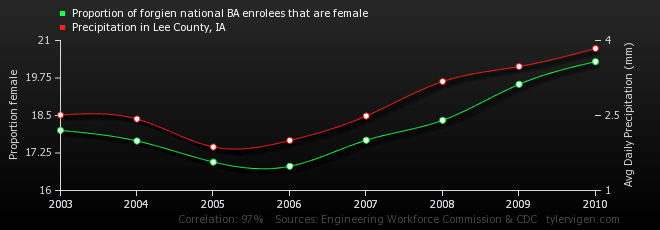

Funny and informative examples of this are given by Tyler Vigen’s spurious correlations

- There might be a real relationship, maybe through side channels. Working with a computer a lot can increase the probability of having carpal tunnel syndrome, but the incidence is pretty low. Other occupations (miners for example) can have a much stronger link to specific illnesses: there are plenty of work-related diseases. Likewise age can definitely contribute to some illnesses (Alzheimer, dementia,…).

- The model might really improve. Using this information does increase the accuracy of the prediction, with respect to not using it.

Despite all this few people would go back to the doctor of the example above. Mostly because instead of asking the patient, looking for symptoms and then checking the leg, maybe do an X-Ray, he tries to get the information mainly from data that often (and in this specific case absolutely) isn’t relevant for his diagnosis.

There is a saying if everything you have is a hammer, everything looks like a nail. The data that you have, the kind of features used to describe the model shape your view of the latter.

This is one of the main sources of racism. Some kind of data is easy to collect: age, profession or color of the skin. Having this data there is a pressure to use the classification of the people along those lines, even if it isn’t relevant.

At first glance slicing errors do not seem like a big issue: Even when using not so relevant data one can look at the statistical distribution along it, and try to reproduce it. Using the projected probability distribution will be better than random guessing.

To understand the issues in this let’s look at the ideal case where one can create the best possible model given the data.

Perfect projected probability distribution

Let’s assume that everything (apart the choice of the features) is perfect, and one uses the projected probability distribution simply to know where to look, for example doing a number of checks proportional to the probability of finding the target in that subgroup. This is the optimal approach given the information that one has, it is also something that seems natural to do: looking at suspicious things more than at the non suspicious things, for example. If done properly, it is not biased in the mathematical sense, and there are cases in which it can be acceptable (in an exploratory phase for example). Unfortunately, it can still be awfully insufficient and have serious drawbacks.

Going back to the example of the Doctor, it is important to note that even if the projected probability distribution is reproduced perfectly (i.e. assuming a perfect model for the data) using the occupation instead of more relevant data skews the results. The strength of the link (and thus the quality of the prediction) depends on the occupation, and this opens the door to discrimination. It is not just that some occupations will be treated worse because there is no strong link to an illness. Think about a sickness with a treatment that has heavy side effects, and can be misdiagnosed. With this approach, people doing that profession will also be more likely to be subjected to the side effects due to a misdiagnosis. It is to be expected that those persons will care more about misdiagnosis and coping with the bad side effects of the treatment.

Compounding this there are other issues that are typically present.

Incorrect representation of the distribution

Previously we assumed that the algorithm faithully reproduces the projected probability distribution. This is rarely the case, indeed there is always an error, and it isn’t necessaily uniformly distributed across the various cases. The learning algorithm can distort the probability distribution, it tries to get the most important features, and the impact in a given minority might be sistematic (either over-estimating or under-estimating the effect in it).

An approach often championed by big data to cope with this is to try to add more data. The relevant data will then be surfaced, or so goes the theory. When the prediction error really becomes negligible this is indeed the case. But when the error is maybe small but non negligible, the goal is normally to improve the average prediction, possibly even at the cost of a worse treatment of some minority. To make matter worse that minority might even be sampled insufficently. These issues explain the danger of adding data that is not tailored for the problem.

Then there is the human interpretation of the data. A high probability is often considered as if it would be completely true (1), and likewise a low probability is considered equivalent to 0. This skews the actual use of the results toward a harsher distribution.

Distribution changes

Even if the algorithm predicts the past probability distribution perfectly, it does not mean that it will predict the future probability distribution too. Indeed if there is no causal relationship between the features (data) used for the prediction, and the predicted quantity, the probability distribution can easily change after you learnt it and decisions are based an outdated model. This can happen in two ways:

Unexpected changes

Think about a place with a water source easily contaminated by rain and sewage water. Expecting cholera in someone living there might be reasonable, and ML can pick it up, suggesting cholera for people living there. Now imagine that finally some sanitation is performed, sewage system, disinfection of drinking water. The incidence of cholera will drop to almost nothing, but the model will still think that people living there have a high probability of having cholera.

This happens because the model did not use “the quality of water where the person lives” as feature, but simply “the place where the person lives”. Without a direct causal relationship (the quality of water, not the location causes cholera), change becomes possible.

With behaviours and cultural constucts this can happen quite quickly, and one decides on outdated models. Also people can cling to outdated clichées, but one can try to argue with a person.

Adversarial changes

If there can be a payoff in gaming (tricking) the system one can try to adapt his strategy to optimize his chances of having a result close to the one wanted. Imagine an illness treated with drugs used recreationally (and possibly addictive). If a given occupation was linked to it, some people might take up that profession to be more likely to be prescribed that drug.

This drastically changes the real distribution from the one that the algorithm assumed. If the algorithm is leaked one can train on it, optimizing his choices, to have the desired result (a misdignosis of the illness to get the drugs). Whether/How much it happens is connected with the difficulty of changing the data the algorithm looks at and the payoff for fooling the algorithm.

Lockout

A model may be incorrect due to several reasons, due to being outdated, due to simplification errors, or even simply due to a bad feature choice (as in the example of the doctor). No matter the reason the mismatch can have some effects, when it is used to decide on something that affects the persons themselves. It might have the effect of pushing a person toward the expected distribution, in particular excluding people.

This is one of the worst effects of racism, the segregation and discrimination.

This kind of feedback loop can happen in several situations. In a procedure to screen to get a loan, refusing the credit to a group of people makes it more difficult for them to improve their situation. If the algorithm is connected with punishment, it can push people incorrectly targeted to “do the deed to get its benefits, as they get the punishment anyhow”.

The exclusion doesn’t have to be absolute, to do damage. I was struck by a sentence of W.E.B Dubois in Criteria of Negro Art that I read on kottke:

when God makes a sculptor, He does not always make the pushing sort of person who beats his way through doors thrust in his face. W.E.B. Dubois

It is emblematic of the loss to society, and the harm to the individual of these mistakes.

That is the reason that it can be better to avoid using data connected to the race, even if the model is less accurate to avoid discrimination. This can also force one to be less lazy, and to use more relevant data.

Privacy

There is much talk about privacy (or the lack thereof), and indeed privacy is a large and complex issue needing much more space than this modest article or paragraph. Here, I just want to rise a few points.

There is more and more data on us and there are many actors interested in it.

The recent GDPR legislation has issues, but it made nonetheless the effort end large amount of code and processing power devoted to trying to monetize our attention and behaviors more visible.

A pervasive monitoring removes spaces where new and different ideas can grow without being subject to public scrutiny before they are ready. I suspect such space is very important for a healthy and democratic society.

The best way to ensure this is not so obvious, some real competition can be more effective than prescriptions. On this aspect the GDPR despite the good intentions came up short. Also the copyright reform recently discussed in the EU (article 11 and 13) risk reducing the competition and increasing censorship.

Moving toward services and protocols, by forcing interoperability and environmental/pollution inspired laws (see 1, 2, 3…) seem a better approach to protect privacy.

Connected to the right to be forgotten, good privacy should ensure that some data is not collected, or at least discarded after some time. Data has a weight, as described in Sustainable Data, by Jan Chipchase, and if it is collected there is a pull to use it. Thus good privacy also helps to counter the temptation to use the more easily collectable data, but probably not so relevant to the question asked, and thus easily discriminatory.

A strong privacy also makes several kinds of misuses by the AI more difficult and expensive.

Opacity

A big problem of AI in general is its opacity, inspectability and appeal options for something that has important consequences for the individual affected. Open documentation of all the data used for a decision, the decision taken and a version identifying the program/model used should be mandatory. This is also similar to what we do for judges.

Ideally all aspects of the procedure should be given. This includes the code, which should thus be open source.

Openness makes malicious manipulations and attacks simpler, but security by obscurity is never good in the long run. Still sometime there may be good reasons to keep the code private.

Even with all the data and code, statistical learning does focus on reproducing a statistical distribution, which is an abstract object, difficult to reason about. Thus it is often difficult to explain the reason for a decision (hence all the research on explainable AI). This is a weakness, but it isn’t something totally unknown, also human decisions are often not fully explainable.

Appeal options are also a complex topic, a big part of the damage of discrimination is informal, and the use of AI for screening reasons as “informal consultant” might fly below the radar, and still inflict damage.

Conclusions

Machine learning is being used more and more, and things like better automatic translation between different languages, being able to quickly search the whole internet (or a good approximation of it) have for sure enriched our society.

Still, as we have shown, there are some non trivial issues with its use. Algorithms can filter and subtly influence the reality that we perceive. Most importantly, discrimination, something we have struggled with for a long time, and still struggle with today.

Machine learning cannot solve the underlying problem of using wrong or insufficient features to model a problem. This can happen also to experts, as was shown for example by the discrimination of women by a screening tool that Amazon tried to develop.

Starting from a purely statistical view, machine learning has its limitations. Statistics do not cover everything, for example, it cannot really think about the consequences of choices or predict new behaviour. Ironically, despite all the “disruptions” connected with it at some level machine learning is ill equipped for the new.

Now historically also we humans have also struggled, and still struggle with these problems. So it isn’t that AI has completely new problems, but currently unless extra effort is done AI can be more opaque. How to keep accountability, identify bias, provide appeal options when AI is involved in important decisions is still an open question, but making the procedure as open as possible, or provide alternatives is probably the best idea.

It would be nice quickly to identify the good persons just based on some simple attribute. With time we learn that reality is more complex: how a person is if something that is hidden in her thoughts, we can try to figure it out from her actions and what other think, and anyhow an absolute good or bad rarely fits.

Not everyone can become a great artist; but a great artist can come from anywhere Anton Ego

Society will be better and richer if all those that can contribute and enrich it do. Now real life is messy we cannot have a perfect utopia, still I feel that we progressed toward a better, democratic, multicultural society, where we try to give to everybody the same chances, but it is a never ending struggle, and we need to push on.

Part of the messy real world is, as always how to cope with the intolerant, discriminating, and racist: Carl Popper’s “how tolerant can you be with intolerance”. I do not know where exactly to put the line, but the answer is definitely not very much tolerant. The hard part is how to handle the intolerant. Sometime one should dismantle the arguments of the intolerant, sometime just avoid to “feed the troll”.

Nowadays, several people try to use racism, for political gain. Polarization is used as a mean of increasing the engagement of their supporters. So one might be skeptical about finding much support against discrimination.

I am not so bleak about this, because as I tried to show we shouldn’t fight this just because “it is bad”, but simply because it is a stupid mistake (albeit easy to make), and correcting it has real concrete advantages:

- For a firm hiring a real talent that would have been lost otherwise

- For a bank giving a loan, getting the money back and doing profit

- For society as a whole, richer and more diverse contributions, and probably more happiness in the end.

Now we just have to guard ourselves also toward discrimination from AI, because it is the smart thing to do…

On this article

Artificial intelligence and machine learning pose have several interesting technical challenges. Normally, working in the field I am mostly interested in those. There are also challenges that affect and should be discussed with the broader society. Social challenges are not less interesting than the technical ones.

I thought about them for a long time, indeed, I started thinking about some things when, immediately after my diploma, I worked at Uptime, a firm that archived documents, including few GB of receipts of Migros per week. My latest work didn’t have any real ethical issues, as it uses simulation data about materials, not any kind of personal data. Nonetheless, I stayed interested also in the bigger picture.

I felt that an important part, especially concerning AI discrimination and racism was missing. Hence I have tried to explain the major issues I see, and maybe suggest some possible approaches to tackle them, hoping to advance the discussion on this important topic.

I want to thank my brothers Akram and Semir for constructive criticism and proofreading, he improved this article, but any remaining errors are obviously my own fault.